Backup Using Warm Standby

Important

This document outlines how to deploy Appspace Core (5.2.3 or later) in a High Availability environment. For this deployment, our engineers used one of the most well-known solutions in the industry: VMware vSphere (v6.0). Please be aware that alternative High Availability solutions may require modified or additional steps to configure the final production environment, and results may differ from those described below.

Introduction

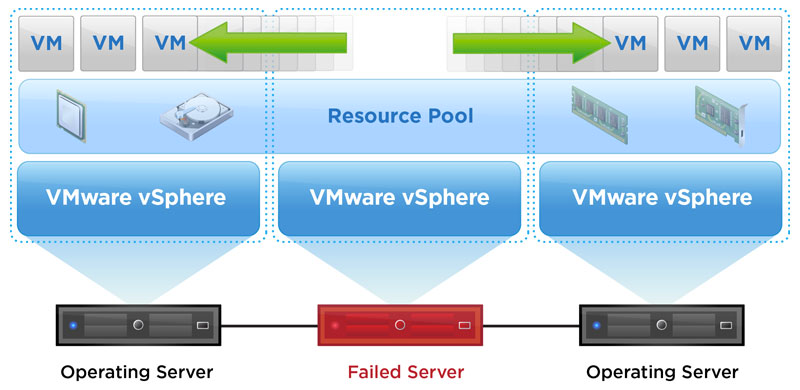

This is a sample Warm Standby implementation using VMware’s High Availability feature. This implementation is based on the clustering of multiple host servers to provide backup should a physical server within the cluster fail.

The switchover process of restarting the virtual machine on another server within the cluster happens swiftly and without the need for human intervention.

Implementation Concept

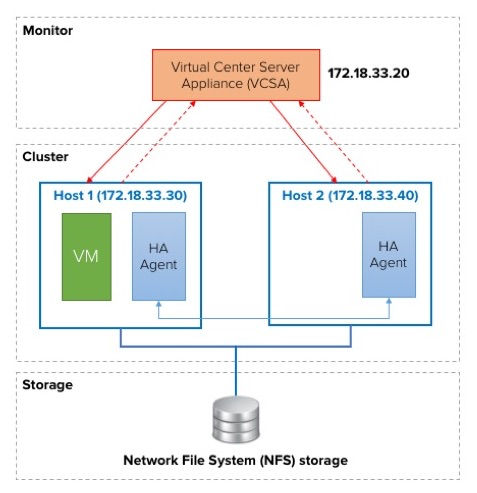

The concept of this implementation is illustrated below:

This implementation requires one server, the vCenter Server Appliance (VCSA), to act as the monitoring server. The VCSA monitors the cluster of hosts that act as a resource pool for the Appspace server.

The monitoring server also performs the switchover (restarting the virtual machine on another host) in the event of a failure. While it is possible to have multiple hosts acting as monitoring servers, we will be using one monitoring server to simplify this implementation.

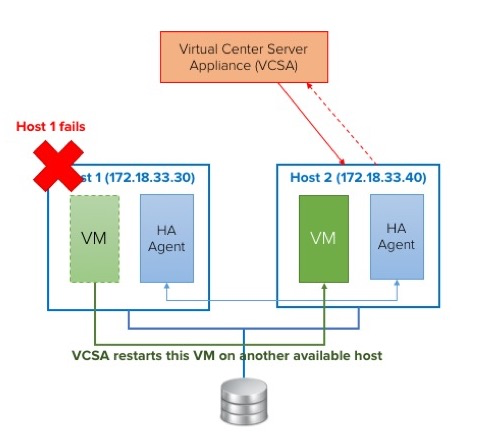

When the monitoring server detects a failure, it restarts the virtual machine on any other available host. This is illustrated below.

The switchover itself is automated and does not require human intervention. As it involves powering off the virtual machine and restarting it on another host, the time it takes depends on the type of storage used. An SSD (solid state drive) may speed up this process, as it has a higher read/write speed than a regular HDD (hard disk drive).

The table below displays the components and network configuration for this implementation. Note that this is not a component/OS recommendation.

| Component | Type | IP | OS | Note |
|---|---|---|---|---|
| VCSA Host | Physical | xxx.xx.33.20 | ESXi | VCSA installed and given IP xxx.xx.33.21 |
| Host #1 | Physical | xxx.xx.33.30 | ESXi | N/A |
| Host #2 | Physical | xxx.xx.33.40 | ESXi | N/A |
| NFS storage | Physical | xxx.xx.33.27 | Linux | Any other network-accessible storage will work |
| Appspace 5.5 | Virtual | xxx.xx.33.39 | Windows 2008 R2 | N/A |

Expectations and Restrictions

- This implementation only works for a virtualized Appspace deployment.

- As we are using Network File System (NFS) storage, data replication is not necessary. All data is stored on a centralized, network-accessible datastore, so data is preserved in the event of a failure.

Prerequisites

Hardware

- 1 x server - functions as the monitor for the cluster (VCSA).

- 2 x servers - function as the resource pool in the cluster.

- 1 x network-accessible storage - functions as the centralized storage for all hosts in the cluster.

Software

- VMware vSphere 6.0 software and license.

- VMware vSphere 6.0 HA checklist.

- VMware ESXi software and license.

- VMware VCSA software and license.

- vSphere client software (downloadable from the ESXi server).

- Appspace 5.2.3 onwards.

- 1 virtual machine created on any host, installed with the required prerequisite software. Please refer to the Platform Requirements section in the Appspace System Requirements article.

Licenses and Clients

- IT Administrator-level access to the Windows Server environment (2008 or 2012) and the installed Appspace instance.

- At minimum, a valid Appspace Cloud Pro/Omni-D login credential for Appspace Installation Center download.

- Take note that we will be using two vSphere clients to access the VCSA and hosts:

  - To access the VCSA (monitoring server) - vSphere Web Client.

  - To access individual hosts - vSphere Client.

Using This Guide

This guide is divided into seven sections as shown below. You must complete each section in the given order before moving on to the next section.

- Installing ESXi

- Deploying VCSA

- Sharing an NFS Datastore between Hosts

- Clustering your Hosts

- Configuring the Heartbeat Datastore

- Checking the Cluster and HA Status

- Host Failure Simulation

Note

Installation steps for third-party software are not covered; however, links to installation guides are provided.

Installing ESXi

All three servers must have VMware ESXi installed. To do so, follow the instructions in the Installing ESXi article at the VMware vSphere 6.0 Documentation Center. These servers must be on the same network, as they all need to be able to communicate with each other.
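The same-network requirement can be checked before installation with a short script. The following is a minimal sketch, not part of the original procedure: the `same_network` helper is hypothetical, the /24 prefix length is an assumption, and the 172.18.33.x addresses are assumed example values (the article masks the first two octets in its table).

```python
import ipaddress


def same_network(addresses, prefix_len=24):
    """Return True if every address falls inside one subnet of the given prefix length."""
    networks = {
        ipaddress.ip_interface(f"{addr}/{prefix_len}").network
        for addr in addresses
    }
    return len(networks) == 1


# Assumed example addresses for the three ESXi servers.
servers = {
    "VCSA host": "172.18.33.20",
    "Host #1": "172.18.33.30",
    "Host #2": "172.18.33.40",
}

if same_network(servers.values()):
    print("All servers share one subnet.")
else:
    print("Servers are on different subnets; check the network configuration.")
```

Sharing a subnet does not by itself guarantee connectivity, so a ping test between the servers is still worthwhile.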

Deploying VCSA

Once you have installed ESXi on all three servers, select one server to act as the monitoring server and deploy VCSA on that server. To do so, follow the instructions in the Deploying the vCenter Server Appliance article at the VMware vSphere 6.0 Documentation Center.

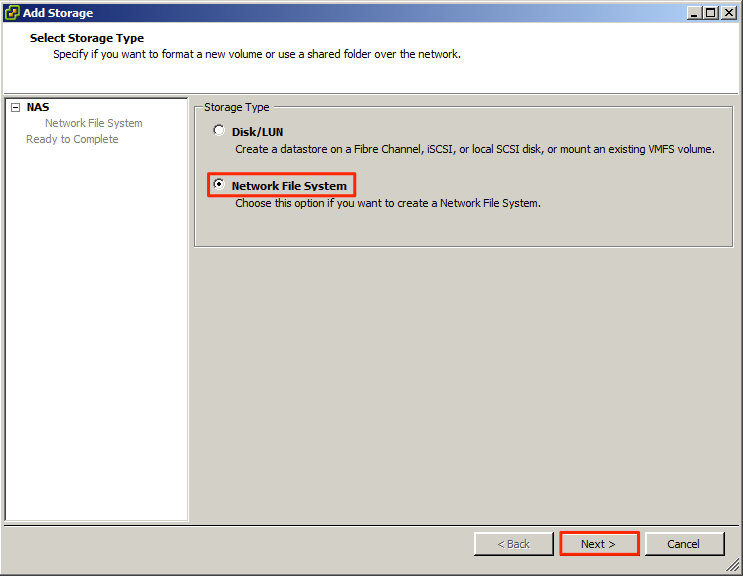

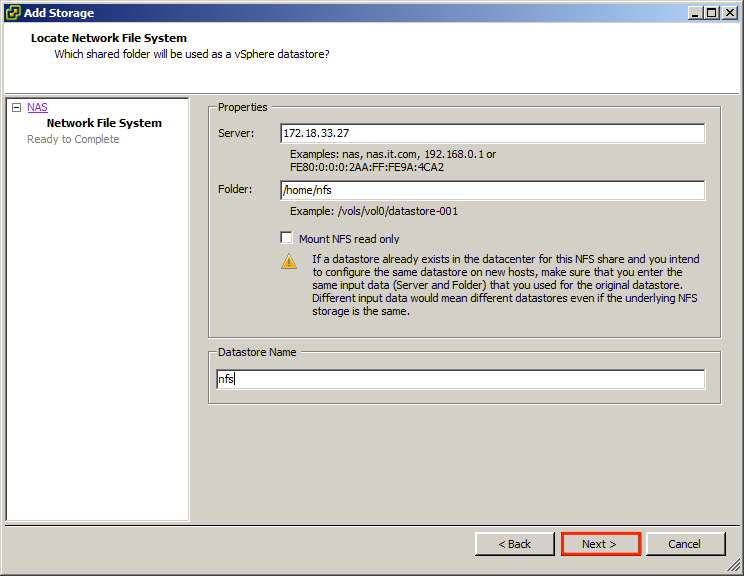

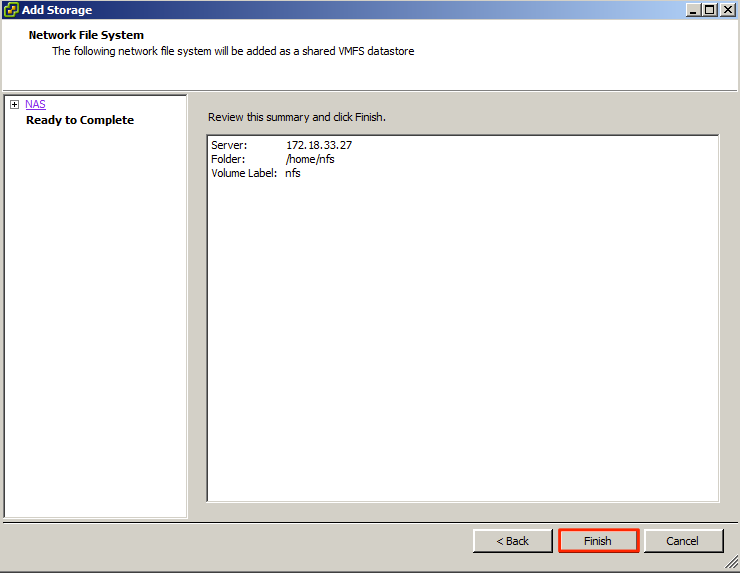

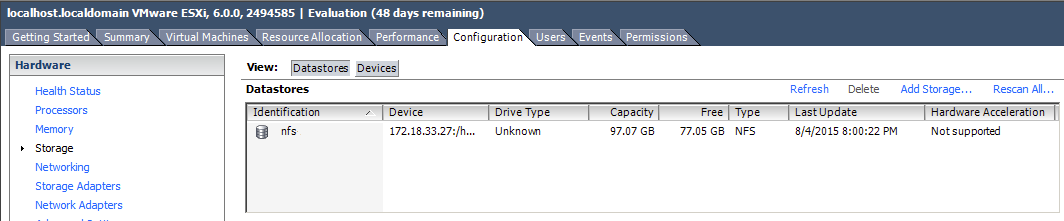

Sharing an NFS Datastore between Hosts

Important

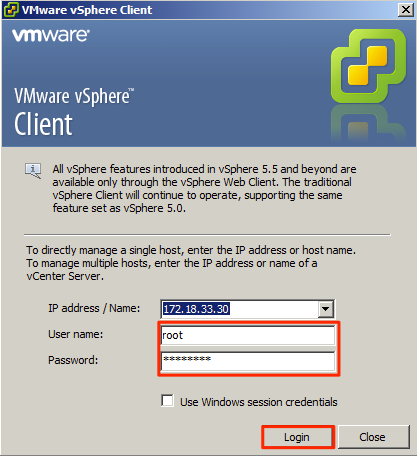

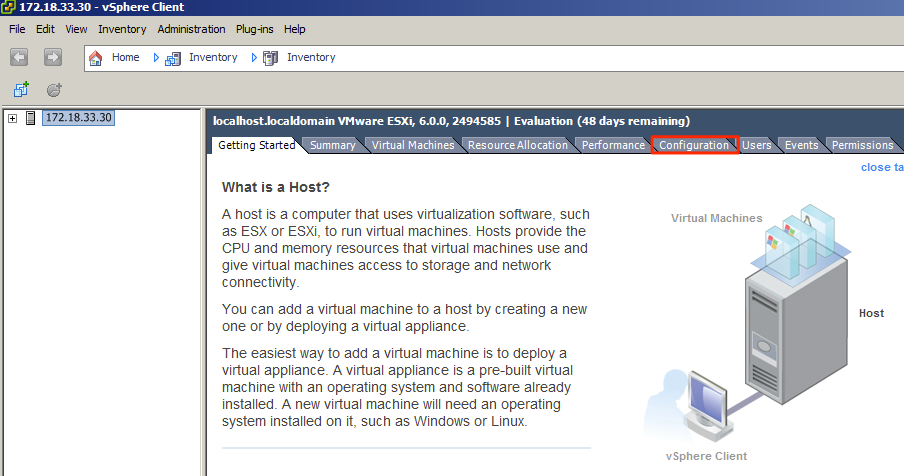

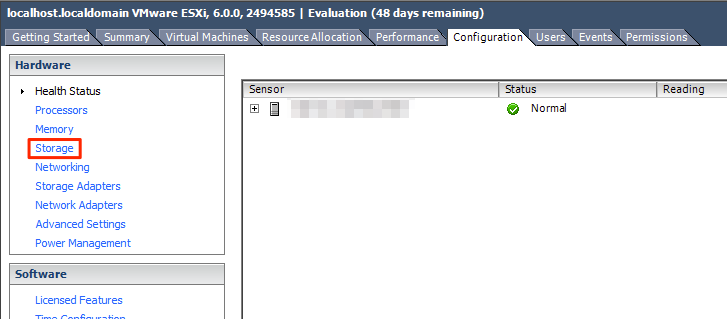

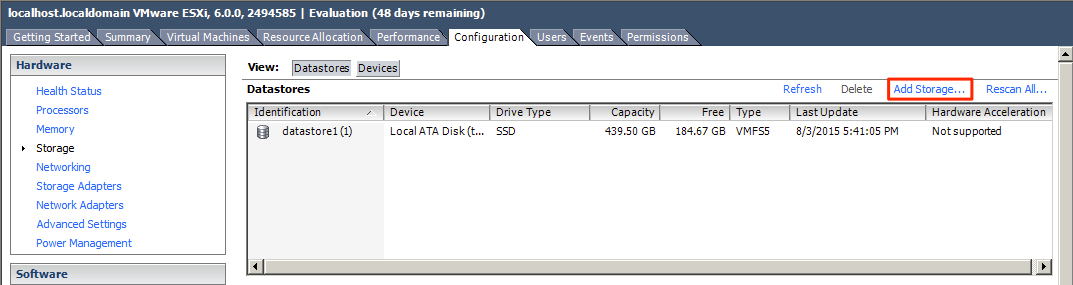

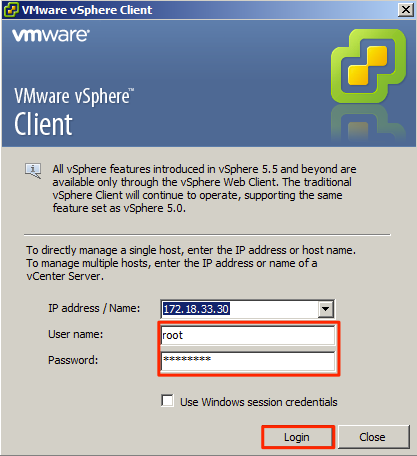

This section requires you to access the VCSA via the vSphere Client.

Configure both hosts to use the same NFS datastore so that no data replication between them is needed. You must have the vSphere Client installed to access the hosts individually, and you must perform these steps on both hosts.

Important

Repeat all steps within this section on all hosts.

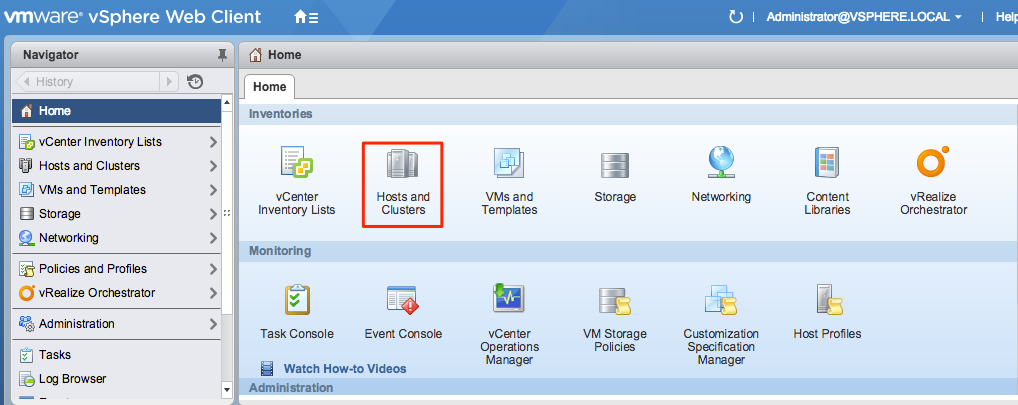

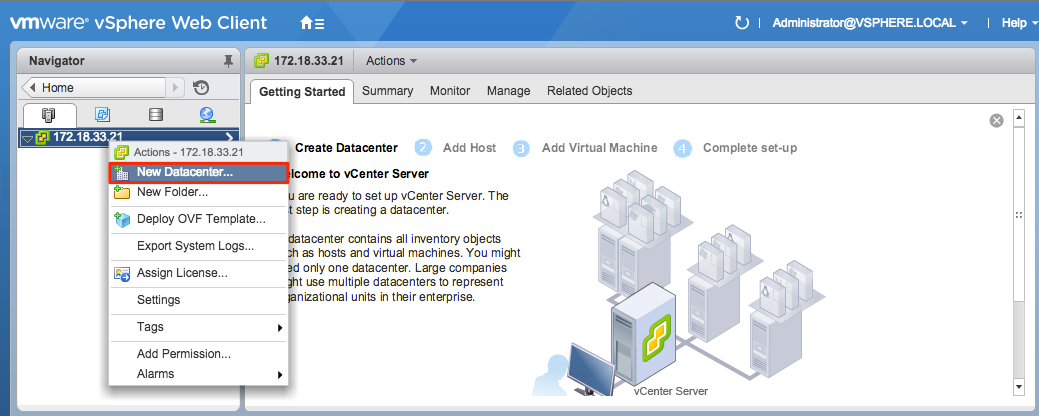

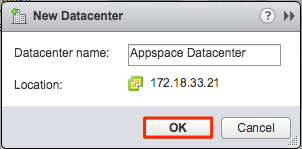

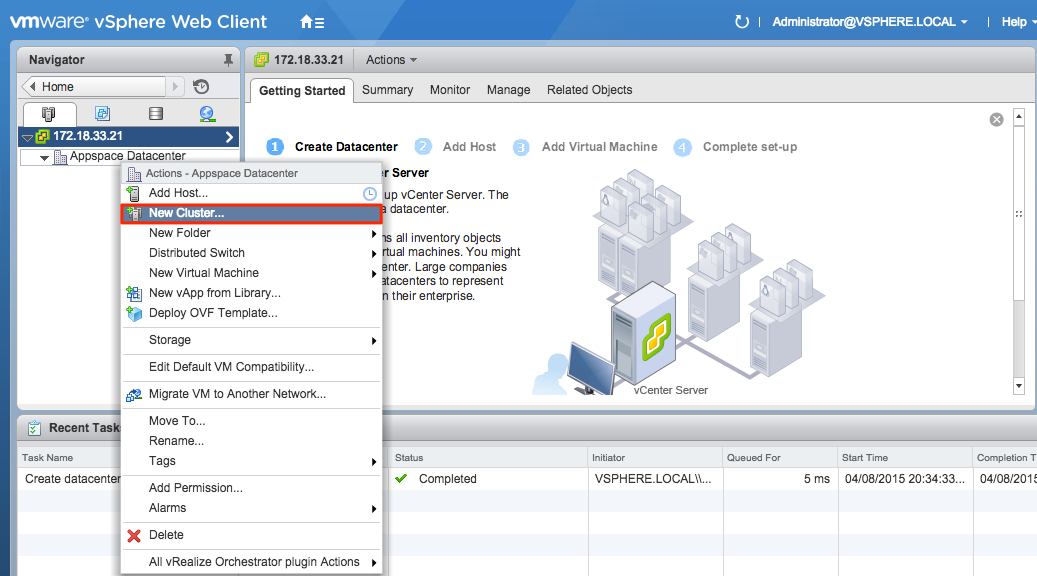

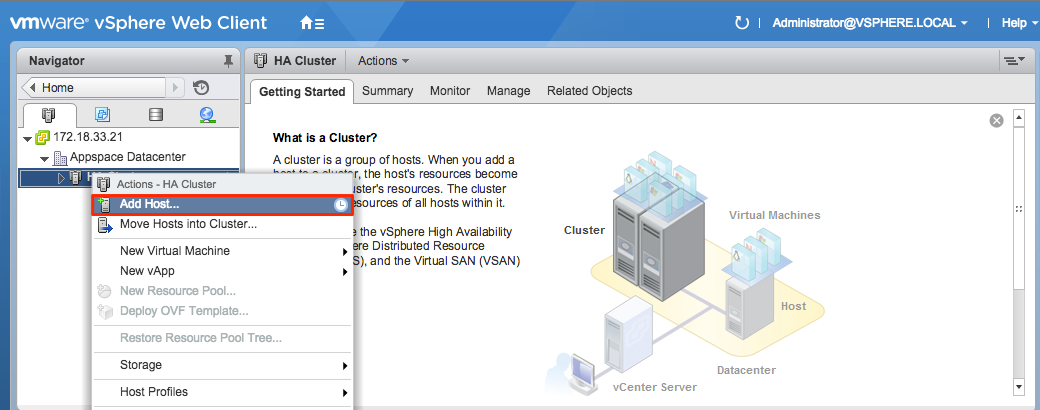

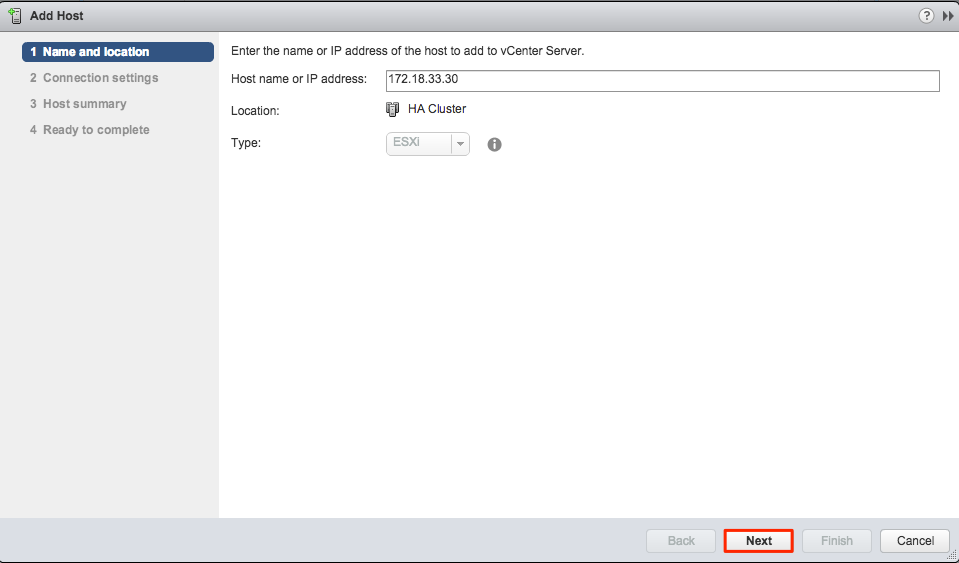

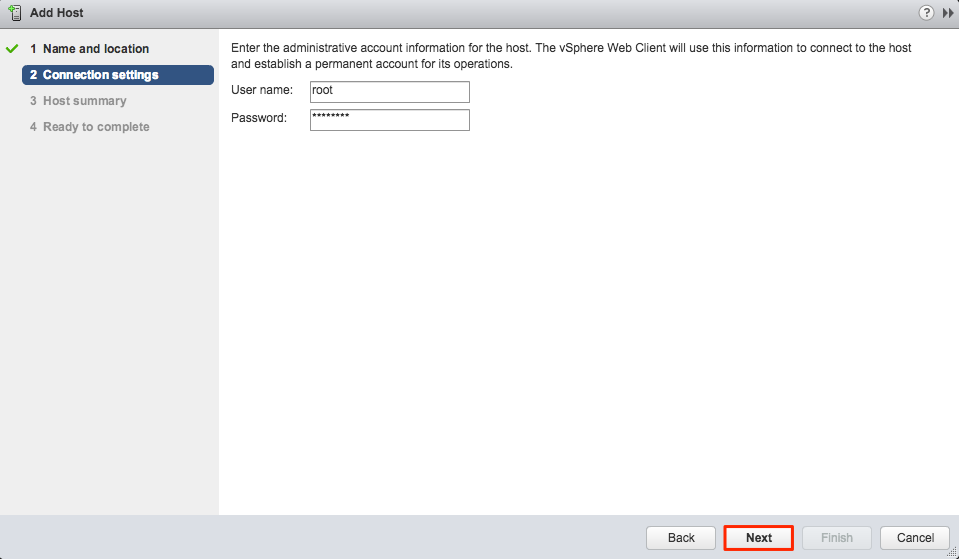

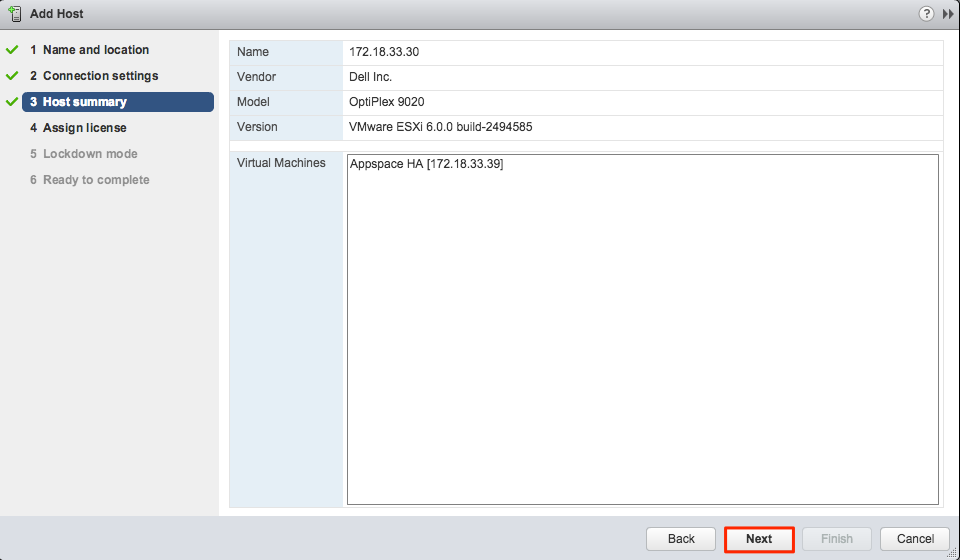

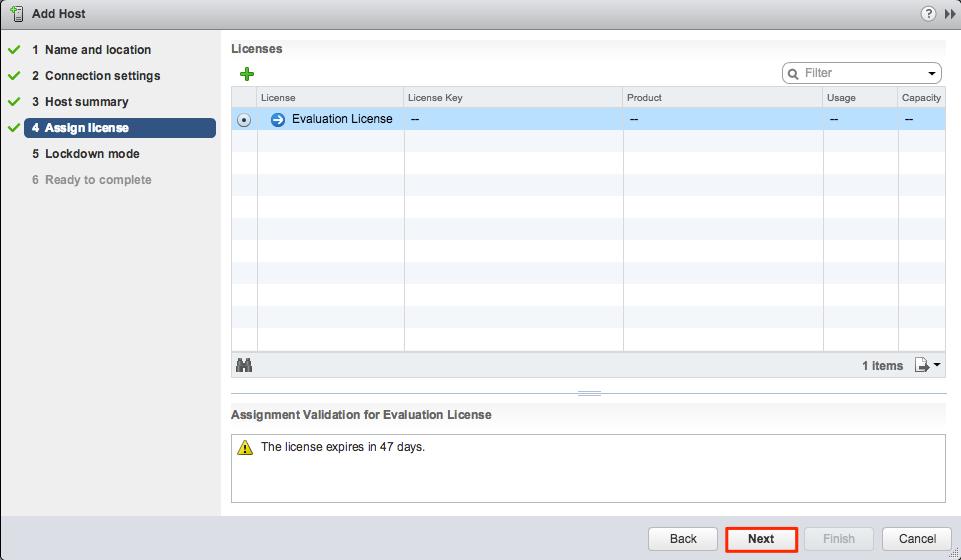

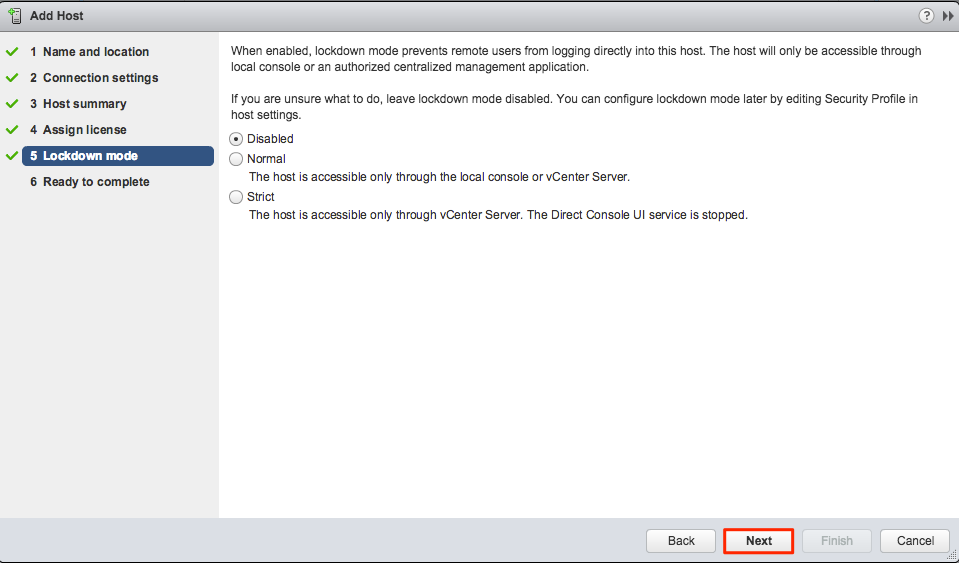

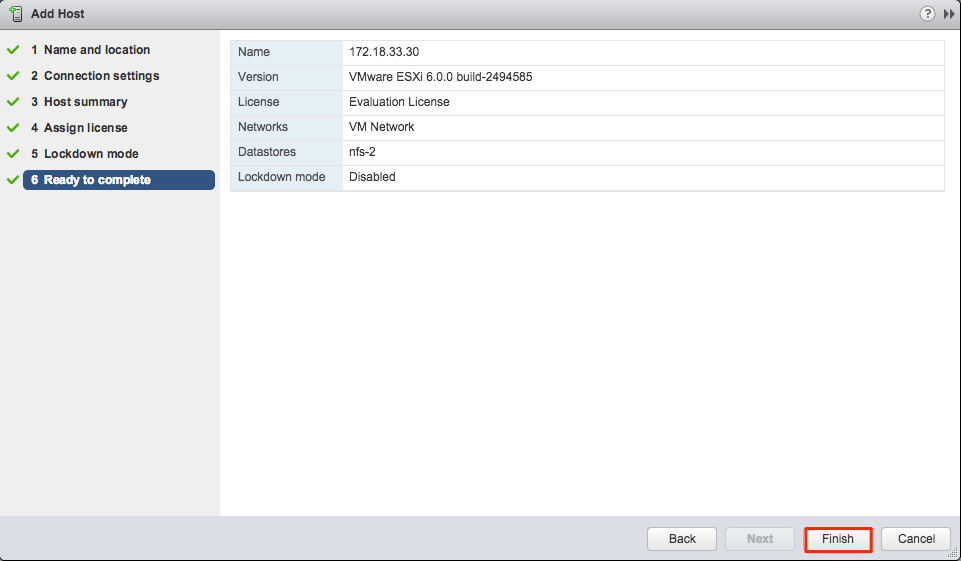

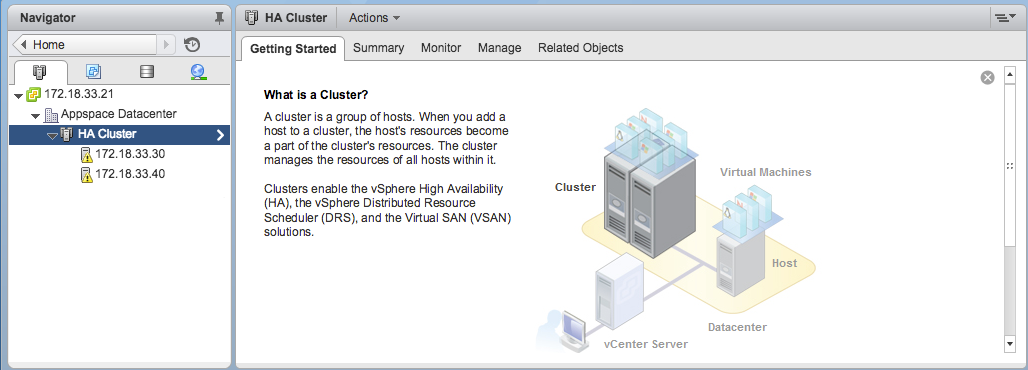
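For readers who prefer the command line, the same mount can be sketched with the `esxcli storage nfs add` command, run on each host's ESXi shell. This is an illustrative alternative to the client steps above, not the procedure the article's engineers used. The helper below only builds the command string so that both hosts receive identical settings; the NFS server address, export path, and datastore name are hypothetical values.

```python
import shlex


def nfs_mount_command(nfs_server, share, datastore_name):
    """Build the esxcli command that mounts an NFS export as a datastore."""
    args = [
        "esxcli", "storage", "nfs", "add",
        f"--host={nfs_server}",
        f"--share={share}",
        f"--volume-name={datastore_name}",
    ]
    return " ".join(shlex.quote(a) for a in args)


NFS_SERVER = "172.18.33.27"   # assumed address of the NFS storage
SHARE = "/export/appspace"    # hypothetical export path
DATASTORE = "appspace-nfs"    # hypothetical datastore name

# Print the identical command for each host in the cluster.
for host in ("172.18.33.30", "172.18.33.40"):
    print(f"{host}: {nfs_mount_command(NFS_SERVER, SHARE, DATASTORE)}")
```

Using one datastore name and one export path on every host keeps the datastore recognizable as the same shared storage across the cluster.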

Clustering your Hosts

Important

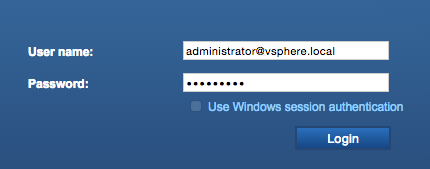

This section requires you to access the VCSA via the vSphere Web Client.

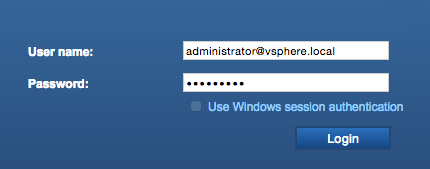

Enter your credentials. These are configured during the VCSA deployment phase.

Note

You may require an additional plugin for the vSphere Web Client to work.

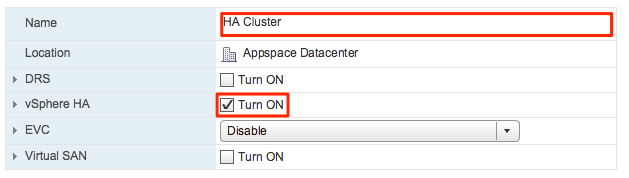

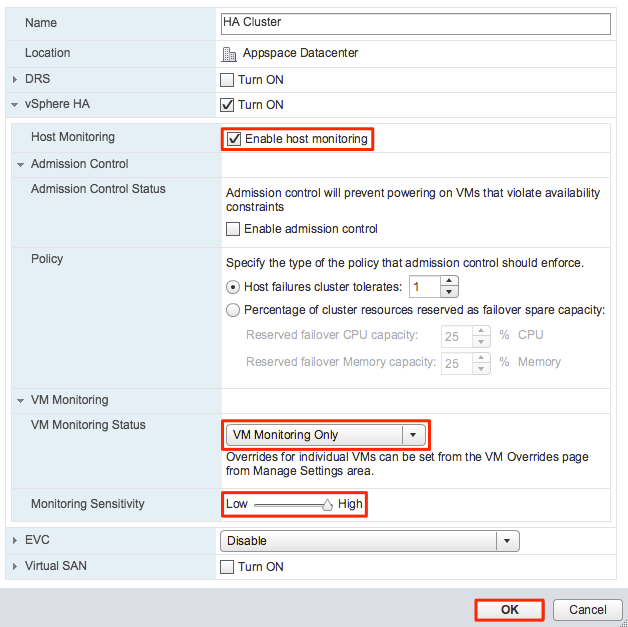

Give the cluster a name, and check the ‘Turn ON’ check box for vSphere HA. You can also turn on DRS, which balances computing workloads within the cluster, but it is not required for vSphere HA.

Upon turning on vSphere HA, additional configuration options become available. Check ‘Enable host monitoring’, select ‘VM Monitoring Only’ and set the monitoring sensitivity to High. Click OK when done.

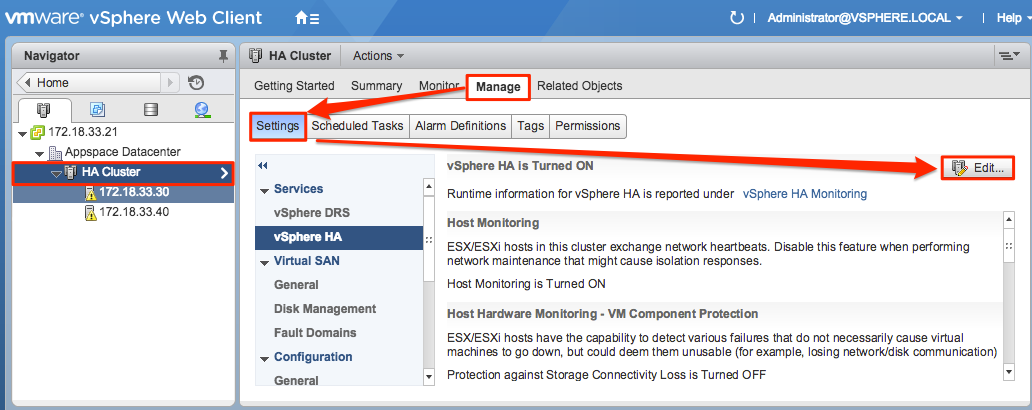

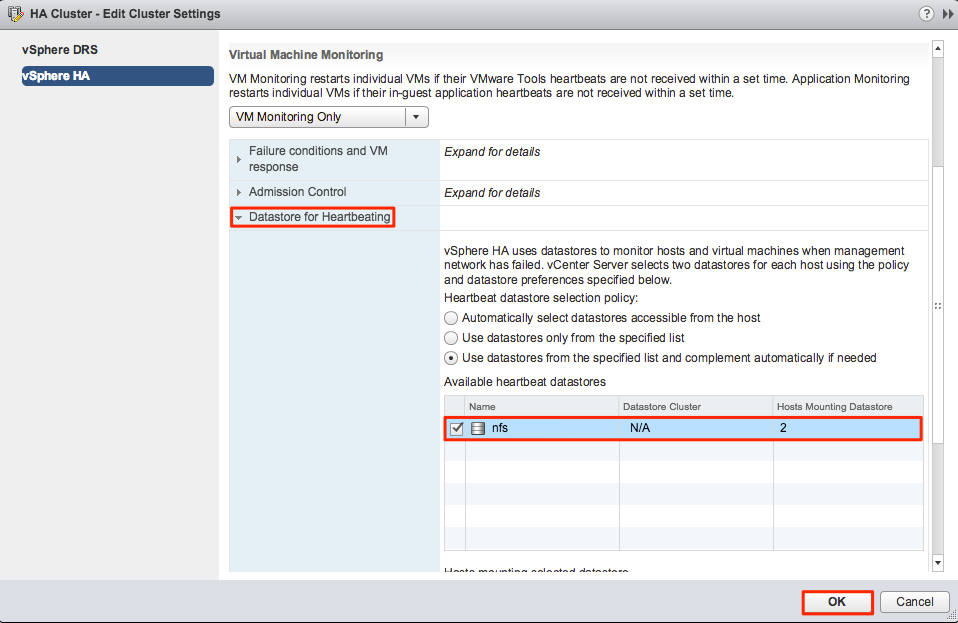

Configuring the Heartbeat Datastore

Important

This section requires you to access the VCSA via the vSphere Web Client.

Configure the heartbeat datastore so it points to the NFS datastore.

Creating the Virtual Machine and Installing Appspace

Important

This section requires you to access the VCSA via the vSphere Client.

- Install the Windows Server and Appspace.

Checking the Cluster and HA Status

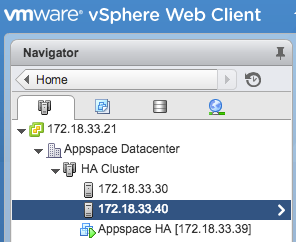

Once Appspace has been installed on a virtual machine, you can proceed to check the cluster and HA status. To do so, log into the VCSA via the vSphere Web Client as shown below.

Expand the datacenter and cluster to gain access to the hosts.

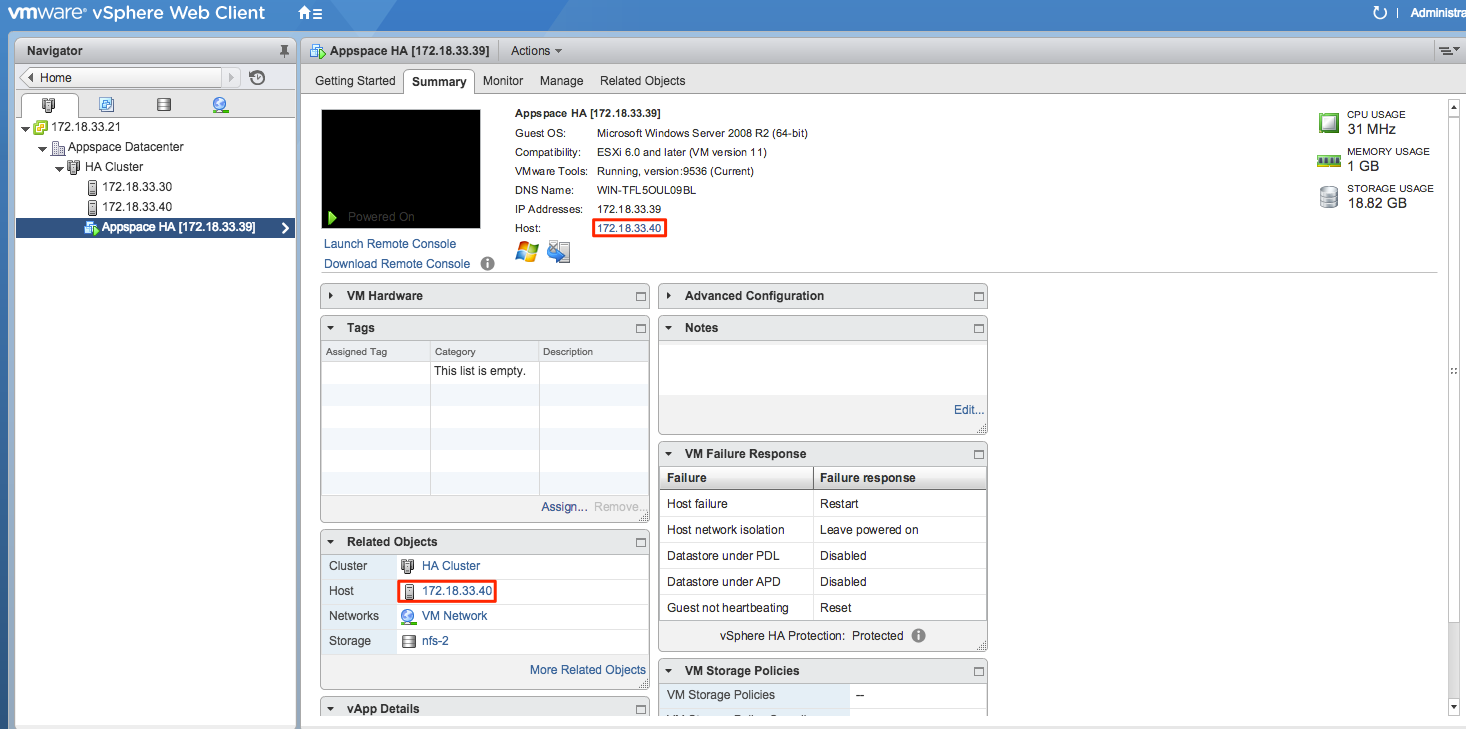

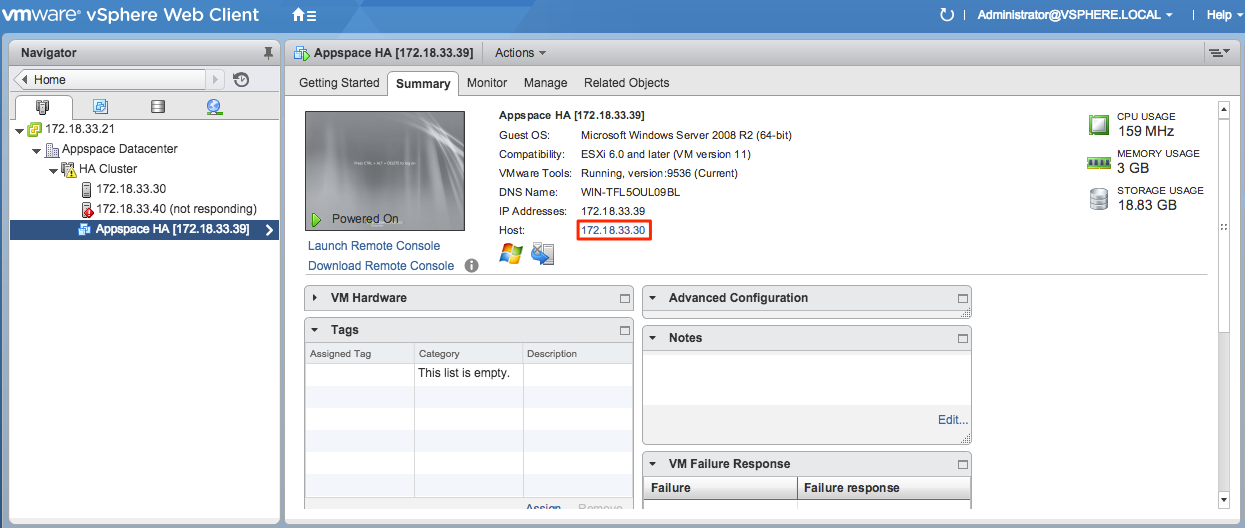

Select the virtual machine to view its host.

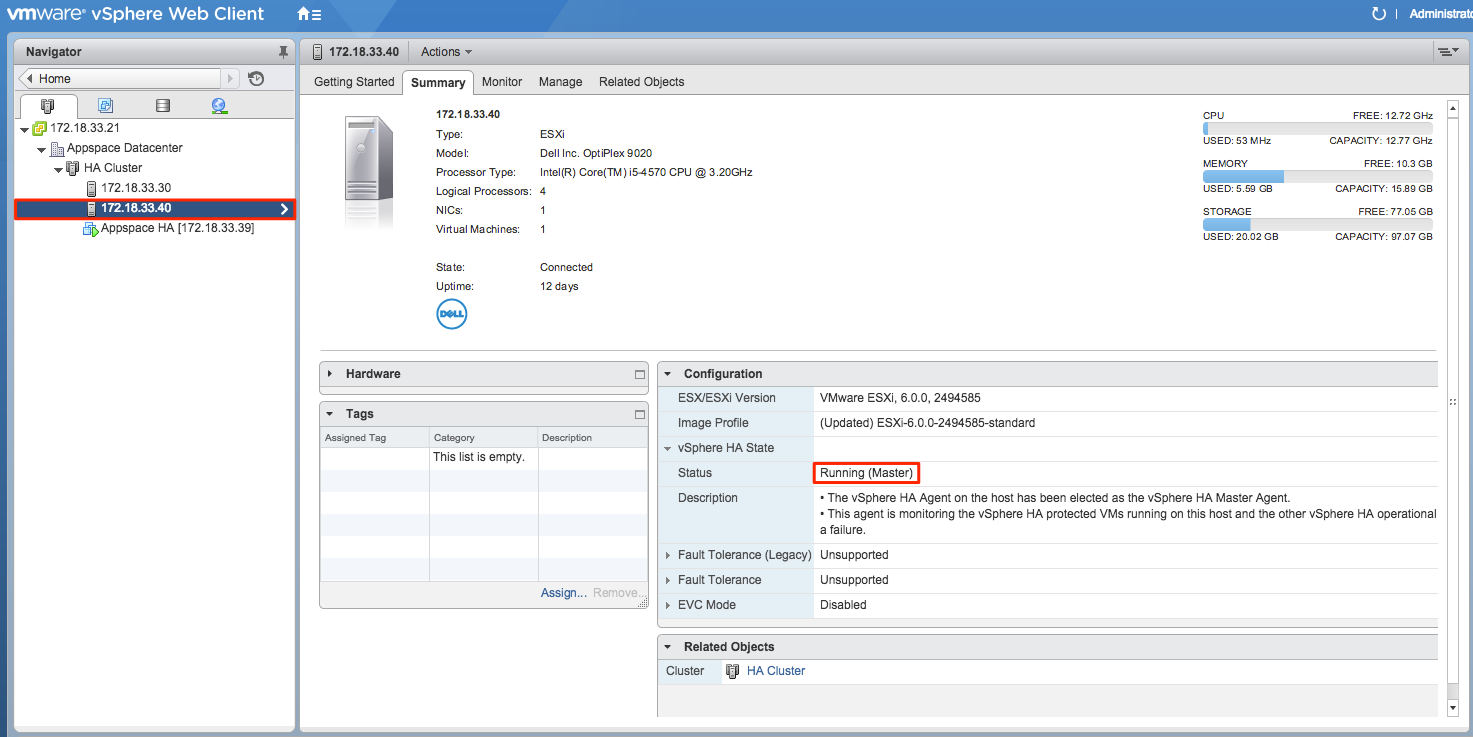

You can also select the host to view its status in the cluster. The image below shows the server 172.18.33.40 acting as the master.

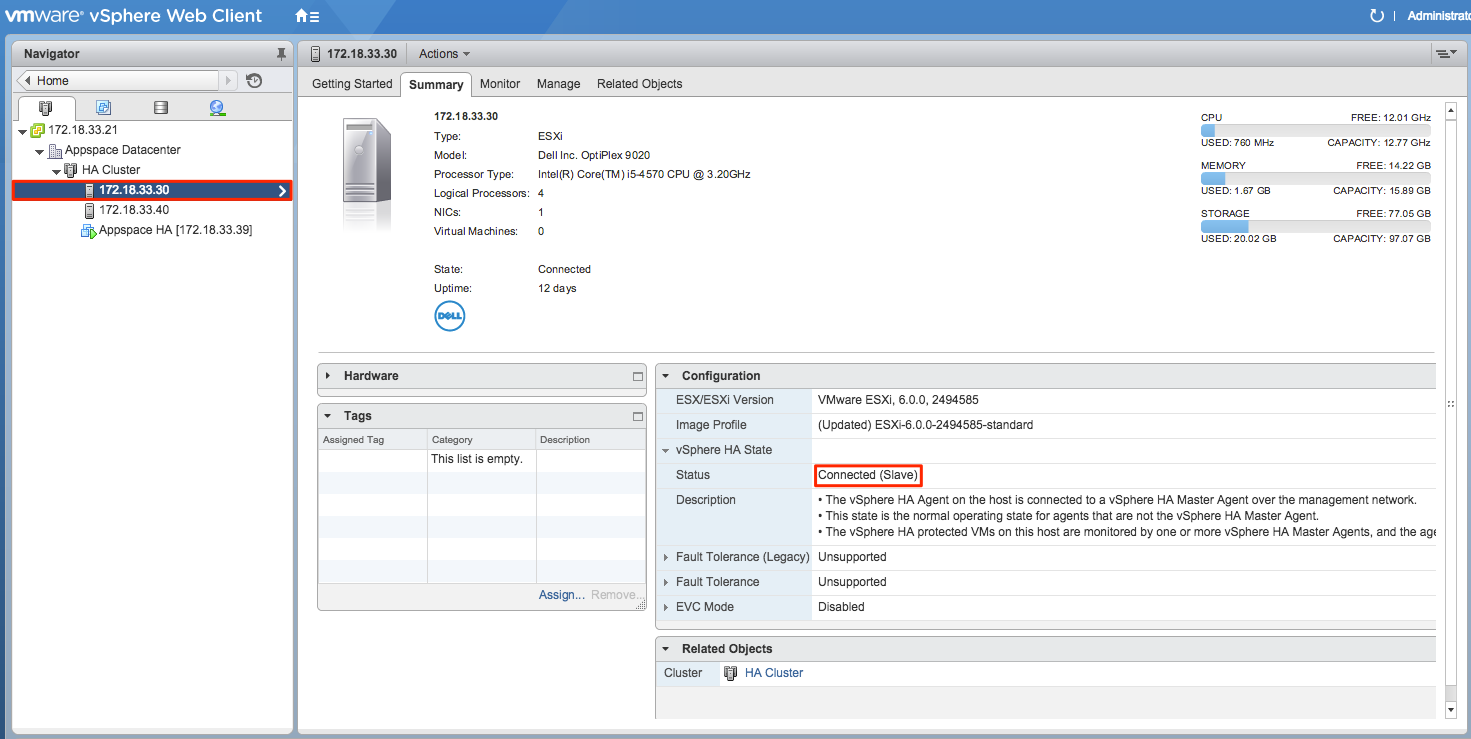

The other server, 172.18.33.30, acts as the slave within the cluster.

Host Failure Simulation

When the VCSA detects a host failure, it automatically restarts the virtual machine on any available host. An example of a simulated failure is shown below.

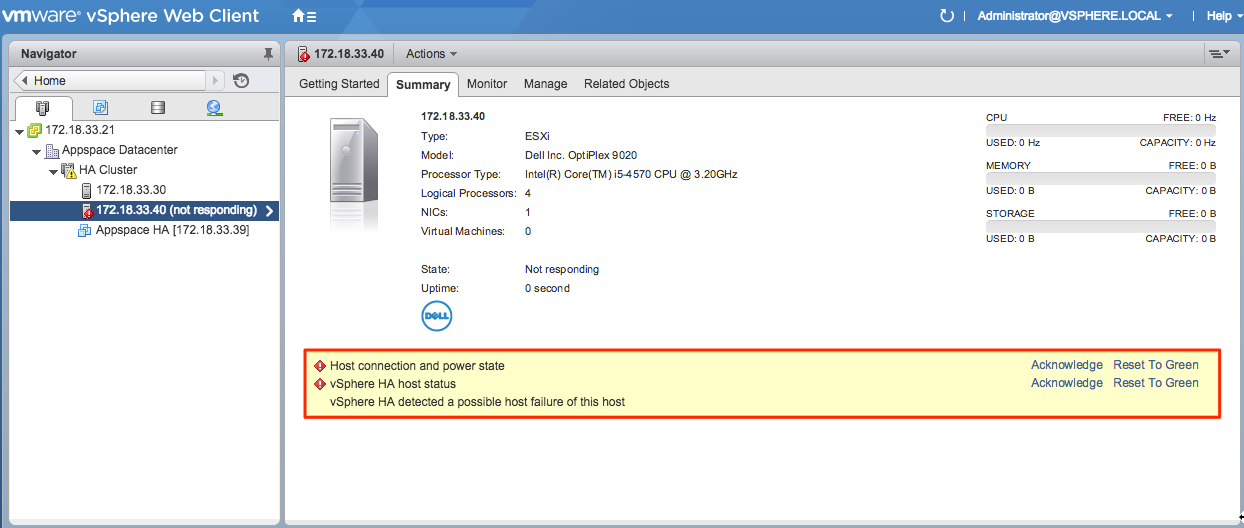

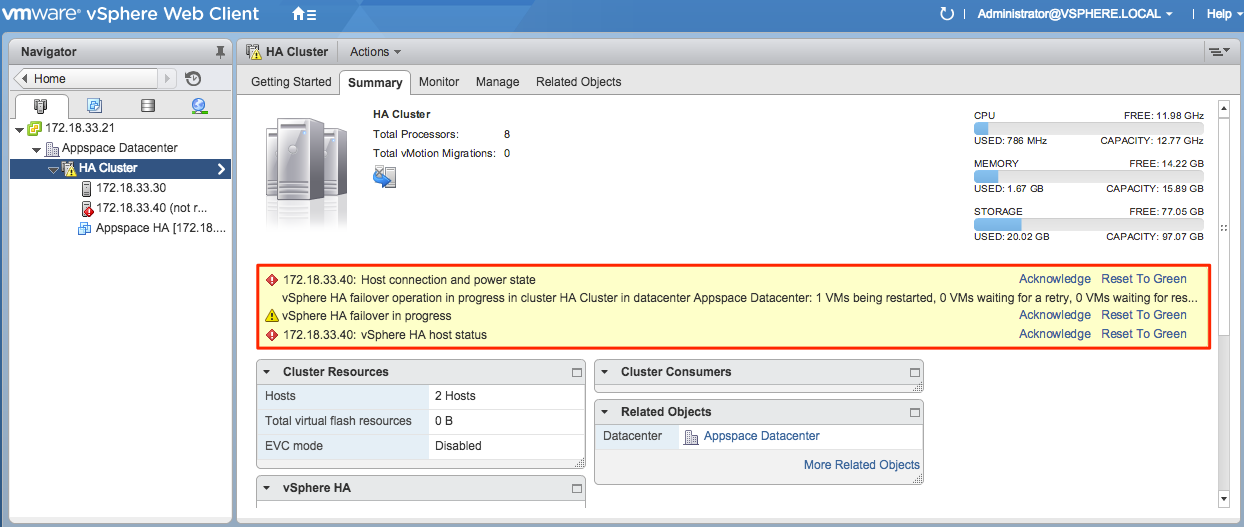

In this simulation, the master host (172.18.33.40) is no longer connected to the VCSA due to a network outage. The VCSA detects this, raises an alarm and notifies the user that failover is in progress.

After a few minutes, the Appspace virtual machine is restarted on the other available host (172.18.33.30) within the cluster.
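The restart behaviour in this simulation can be modelled in a few lines. The sketch below is a simplified illustration only, not how vSphere HA is implemented: the `Host` class and `fail_over` function are hypothetical, and choosing the least-loaded surviving host is an assumption made for the example.

```python
from dataclasses import dataclass, field


@dataclass
class Host:
    address: str
    connected: bool = True
    vms: list = field(default_factory=list)


def fail_over(hosts, failed_address):
    """Mark one host as failed and restart its VMs on another connected host."""
    failed = next(h for h in hosts if h.address == failed_address)
    failed.connected = False
    survivors = [h for h in hosts if h.connected]
    if not survivors:
        raise RuntimeError("no available host left in the cluster")
    # Assumption for this sketch: pick the survivor running the fewest VMs.
    target = min(survivors, key=lambda h: len(h.vms))
    target.vms.extend(failed.vms)
    failed.vms = []
    return target


# The two hosts from the simulation; the Appspace VM starts on the master.
cluster = [Host("172.18.33.30"), Host("172.18.33.40", vms=["Appspace"])]
target = fail_over(cluster, "172.18.33.40")
print(f"Appspace restarted on {target.address}")
```

With only two hosts, a second failure leaves no survivor and the function raises an error, which is the situation that adding more hosts to the cluster is meant to avoid.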

To improve availability, this solution can be implemented with additional hosts to increase the resource pool and ensure access to the Appspace server is always available.